Originally posted on July 15, 2015 @ 11:10 AM

Do You Believe in Good and Bad Luck?

First published in Sept 2012

Soon it will be the football final and Melbourne Cup season again, and millions of Australians will prove to each other that they believe in good and bad luck. There will be arguments about odds and probabilities, bank notes will change hands and the odd drop of wine will pass between friends. I have already lost a bottle of wine on my favourite team last weekend and it was only a semi final. In Australian culture, if you say you believe in your team or horse, you have to demonstrate that belief by backing it with a bet. If you won’t bet on your team or your horse, you are determined to be ‘full of it’.

However, when it comes to betting on anything human or animal, there is no predictability, and there are heaps of bookies and sports betting agencies who will relieve you of your money if you want to find out about predictability. It doesn’t matter if your team has been the best all year or if your horse is Black Caviar, there will be odds available for you to test your belief. Bookies and betting agencies know, like insurance companies, that nothing is predictable. With humans and animals, fallible beings, there are too many social, psychological and environmental variables.

The world expert in probability and risk is Daniel Kahneman, Nobel prize winner and co-founder (with Amos Tversky) of Prospect Theory. His recent book Thinking, Fast and Slow is a must read for anyone in the area of risk, safety or security. Kahneman writes in his book about ‘regression to the mean’.

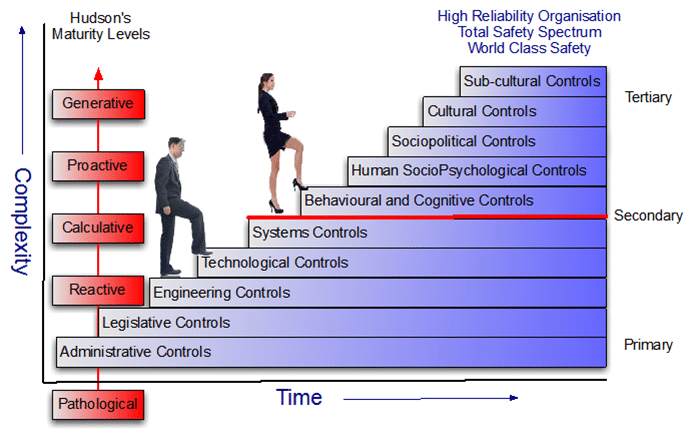

Failure to appreciate ‘regression toward the mean’ is common in calculative organisational cultures (Hudson’s model of safety maturity) and often leads to incorrect interpretations of, and conclusions drawn from, lag indicator data.

Kahneman was attempting to teach flight instructors that praise is more effective than punishment. He was challenged by one of the instructors who relayed that in his experience praising a cadet for executing a clean manoeuvre is typically followed by a lesser performance, whereas screaming at a cadet for bad execution is typically followed by improved performance. This, of course, is exactly what would be expected based on regression toward the mean. A pilot’s performance, although based on considerable skill, will vary randomly from manoeuvre to manoeuvre. When a pilot executes an extremely clean manoeuvre, it is likely that he or she had a bit of luck in their favour in addition to their considerable skill. After the praise but not because of it, the luck component will probably disappear and the performance will be lower. Similarly, a poor performance is likely to be partly due to bad luck. After the criticism but not because of it, the next performance will likely be better.

Kahneman (2011, p. 176) comments:

The discovery I made on that day was that the flight instructors were trapped in an unfortunate contingency: because they punished cadets when performance was poor, they were mostly rewarded by subsequent improvement, even if the punishment was actually ineffective. Furthermore, the instructors were not alone in that predicament. I had stumbled onto a significant fact of the human condition: the feedback to which life exposes us is perverse. Because we tend to be nice to other people when they please us and nasty when they do not, we are statistically punished for being nice and rewarded for being nasty.

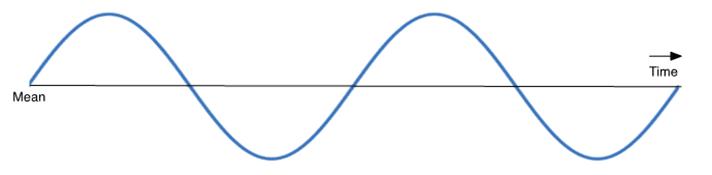

To drive this point home, Kahneman had each instructor perform a task in which a coin was tossed at a target twice. He demonstrated that the performance of those who had done the best the first time deteriorated, whereas the performance of those who had done the worst improved. This is illustrated in Figure 1.

Figure 1. Regression to the Mean
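The flight-instructor and coin-toss examples can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not Kahneman’s own experiment: each performance is modelled as a fixed skill plus random luck, both drawn from normal distributions, and the names `simulate_two_attempts` and `group_means`, along with every parameter value, are hypothetical.

```python
import random
from statistics import mean


def simulate_two_attempts(n_pilots=10_000, skill_sd=1.0, luck_sd=1.0, seed=42):
    """Return (first, second) scores, each modelled as skill + luck.

    Assumption: skill stays fixed between attempts, luck is redrawn,
    and nobody is praised or punished in between.
    """
    rng = random.Random(seed)
    results = []
    for _ in range(n_pilots):
        skill = rng.gauss(0.0, skill_sd)
        first = skill + rng.gauss(0.0, luck_sd)
        second = skill + rng.gauss(0.0, luck_sd)
        results.append((first, second))
    return results


def group_means(results, fraction=0.1):
    """Average both attempts for the best and worst first-attempt groups."""
    ranked = sorted(results, key=lambda pair: pair[0])
    k = max(1, int(len(ranked) * fraction))
    worst, best = ranked[:k], ranked[-k:]
    return {
        "best_first": mean(f for f, _ in best),
        "best_second": mean(s for _, s in best),
        "worst_first": mean(f for f, _ in worst),
        "worst_second": mean(s for _, s in worst),
    }


if __name__ == "__main__":
    for name, value in group_means(simulate_two_attempts()).items():
        print(f"{name}: {value:.2f}")
```

In this sketch the best first-attempt group scores lower on the second attempt and the worst group scores higher, even though no feedback of any kind is modelled. The best group still stays above average, because skill persists while luck does not.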

Calculative organisations regularly attribute competence to what is little more than luck and circumstance. What often happens is that such an organisation is shocked by a series of poor lag indicators (under the assumption that they indicate safety culture performance) or by a serious event, and calls in a consultant, often a Behavioural-Based Safety (BBS) expert. This is what follows: a program is undertaken, lag indicator records improve and everyone parades the results at conferences, attributing the success to the effectiveness of the program. The moment the calculative organisation achieves this new perceived standard, it establishes this as a new mean and then sets target reductions based upon the best result.

In subsequent years the organisation then develops a problem. If the newly established mean is not the actual mean, the organisation becomes disappointed by regression to the real mean rather than critiquing itself or the arbitrary mean it adopted. Some organisations that are more reactive and pathological in nature (to use Hudson’s descriptors) then manipulate the data or redefine risk and/or injury to maintain the delusion of improvement.
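The same trap can be shown with lag indicator counts. The sketch below is a hypothetical illustration, not real injury data: it assumes every organisation has exactly the same unchanging underlying risk (500 workers, each with a 2% chance of a recorded injury per year), and that a ‘program’ is launched only after a bad year. The function names, the trigger threshold and all numbers are assumptions chosen for the example.

```python
import random
from statistics import mean


def yearly_incidents(rng, workers=500, p_incident=0.02):
    """Recorded injuries in one year; each worker is an independent chance event."""
    return sum(rng.random() < p_incident for _ in range(workers))


def simulate(n_orgs=2000, trigger=14, seed=1):
    """Compare the 'bad' year that triggers a program with the year after it.

    Assumption: the program has no effect at all, so the underlying
    risk in the following year is identical.
    """
    rng = random.Random(seed)
    before, after = [], []
    for _ in range(n_orgs):
        year1 = yearly_incidents(rng)
        if year1 >= trigger:  # a poor lag indicator prompts the program
            year2 = yearly_incidents(rng)  # same risk as before
            before.append(year1)
            after.append(year2)
    return mean(before), mean(after)


if __name__ == "__main__":
    bad_year, next_year = simulate()
    print(f"average count in trigger year: {bad_year:.1f}")
    print(f"average count in following year: {next_year:.1f}")
```

Under these assumptions the following year looks like a clear improvement, although nothing has changed except luck. An organisation that then adopts its best year as the new baseline is setting a target below the long-run average of about 10 incidents, so later disappointment is built in.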

Lag data can be so easily manipulated that it becomes meaningless. Wagner’s research included interviews with twenty CEOs of Australia’s largest companies. Wagner (2010) states:

Most CEOs no longer relied on the Lost Time Injury Frequency Rate (LTIFR) and OHS audits as primary performance measures. All reported some difficulty in measuring the effectiveness of their programs and most were exploring lead measures.

The idea that lag indicators are a measure of performance is based upon the ideas of Herbert Heinrich (1931) and similar mechanistic approaches to incident prediction, causation and the understanding of risk. Heinrich was an insurance salesman who sought to impose a scientific approach on the understanding of risk. Heinrich’s Safety Pyramid, whilst popular in the safety industry, has no validity as either a predictive or an explanatory tool for how humans and organisations manage risk. There is no evidence to show that the ideas of Heinrich, or those of later Behavioural-Based Safety (BBS) disciples, equate to reality or explain the social-psychological or neuropsychological evidence about human judgment and decision making.

Heinrich’s Safety Pyramid is present in the discourse of most calculative organisations. It is a mechanistic paradigm that appeals mostly to engineers and professions that adore quantitative measurement. However hard one tries to impose a measurable and predictive paradigm on humans, one will be greatly disappointed.

The High Reliability Organisation (HRO) was first introduced by Prof K. E. Weick in 1999. An HRO is not fixated on mechanistic approaches to risk; rather, it understands humans to be far less predictable and knows that claims such as being able to ‘tame the unexpected’, ‘zero harm’ or ‘all accidents are preventable’ are delusional. The HRO knows that with humans and risk, one never arrives but journeys on in a state of ‘chronic unease’ (again Hudson’s term). Indeed, any claim to have arrived demonstrates that one is calculative rather than generative. With this in mind, let’s have a look at three important facts, based upon the calculative assumption that lag data represents safety culture performance.

1. It was announced this week that Queensland has the second highest fatality rate of any jurisdiction (http://www.safeworkaustralia.gov.au/sites/SWA/AboutSafeWorkAustralia/WhatWeDo/Publications/Pages/NotifiedFatalitiesMonthlyReport.aspx).

2. Over 200 organisations are registered with the state as zero harm leadership organisations (http://www.deir.qld.gov.au/workplace/publications/safe/feb12/zero-harm/index.htm).

3. Queensland has been a ‘zero harm’ state now for nearly 10 years. Just imagine how bad things would be if the mantra of zero was not guiding their thinking.

So, how does an organisation become an HRO or a ‘generative’ organisation? It all depends on what the organisation thinks about and pays attention to. The diagram at Figure 2, the Human Dymensions Risk and Safety Matrix, shows the kinds of domains that need to be on the radar of an organisation that desires to be ‘generative’. Hudson’s model has been overlaid on my model to show the correspondence. Unless an organisation is prepared to begin thinking and influencing above the red line, it will never become an HRO and it will never be generative. Indeed, the fixation on everything zero and calculative will simply hold it back.

So here’s the challenge for the betting season. Predict a winner and put some money on it; with any luck you might end up with zero.

Figure 2. Human Dymensions Risk and Safety Matrix

References

Hudson, P., (1999) The Human Factor in System Reliability – Is Human Performance Predictable? RTO HFM Workshop, Siena Italy.

Kahneman, D., (2011) Thinking, Fast and Slow. Farrar, Straus and Giroux, New York.

Wagner, P., (2010) Safety – A Wicked Problem, Leading CEOs discuss their views on OHS transformation. SIA, Melbourne.

Weick, K., (2001) Making Sense of the Organisation Vol. 1. Blackwell, London.

Weick, K., (2001b) Making Sense of the Organisation Vol. 2. Blackwell, Oxford.
