Understanding The Social Psychology of Risk And Safety

The article is a bit longer than usual and if you would prefer to download a copy for future reference then you can DOWNLOAD HERE: Understanding-the-Social-psychology-of-Risk-and-Safety.docx

In response to a number of emails I have received and conversations I’ve been having (and sadly some very ignorant comments posted on LinkedIn) I thought it would be a great idea to republish this excellent summary of the principles by Dr Rob Long.

Understandably, given how the same old systems and techniques have become so firmly entrenched, a number of people have become a tad bewildered about what his particular approach to risk and safety is all about. Rob has written a brief academic style paper which I have found extremely helpful and I hope you do as well. I particularly like the comparative table at the end of the article, shown below on this page, which puts a few of the approaches side by side and shows what makes each approach tick.

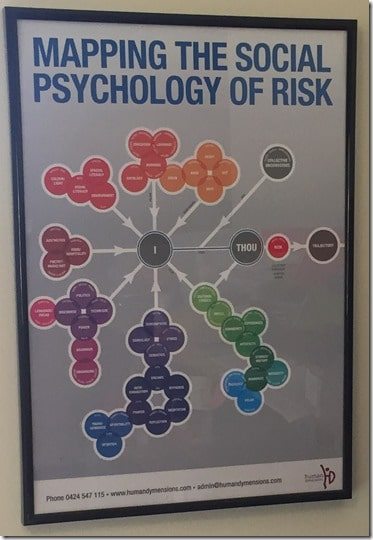

Rob has produced a map of the body of knowledge on this subject and I have this on the wall above my desk (pictured) – this has really helped me to get my head around it all (more info on social psychology map here)

Dr Rob Long has published a number of really interesting articles on the topic (see them here).

The Social Psychology of Risk And Safety

Social psychology is the study of the nature and causes of human social behavior, with an emphasis on how people think about each other and how they relate to each other. As the mind is the axis around which social behavior pivots, social psychologists tend to study the relationship between mind(s) and social behaviors. Social psychology is also the scientific study of how people’s thoughts, feelings, and behaviors can be influenced by the actual, imagined, or implied presence of others.

In 1908 William McDougall published Social Psychology, and Floyd Allport published a book by the same title in 1924. It was Allport’s book that sent social psychologists, as distinct from psychologists, off into a wave of experiments to see how individuals were influenced by social arrangements. For a comprehensive look at a history of experiments with people see Abelson, R., Frey, K., and Gregg, A., (2004) Experiments with People: Revelations from Social Psychology. Lawrence Erlbaum Associates Publishers, London. Research exploded in social psychology in the late 1920s and 1930s, further supported by Gardner Murphy’s Experimental Social Psychology and Carl Murchison’s Handbook of Social Psychology.

Robert Cialdini (Cialdini, R., (2009) Influence: Science and Practice. Pearson. Boston) describes how people are influenced and persuaded by social arrangements and identifies six underlying social dynamics that affect human judgment and decision making. Cialdini’s six ‘weapons of persuasion’ are:

- Reciprocation. Anthropologists consider reciprocity to be a universal social norm.

- Commitment to Consistency. According to Festinger (1957) people are reluctant to behave in ways that are inconsistent with their public commitments.

- Social Proof. If we see many other people doing something, we are more likely to do it. The psychology of mass movements is foundational for understanding cults, ‘group think’, the authoritarian personality, gambling and risk, eugenics, xenophobia and a host of social movements/sub cultures in society.

- Authority. If someone is recognised as being in authority we are more likely to do what they ask. The experiments and work of Stanley Milgram (Obedience to Authority) demonstrated this.

- Liking. People are more likely to be persuaded by those they like.

- Scarcity. When we perceive something as scarce we are more likely to buy it, and make the most of the opportunity.

The ‘father’ of social psychology is sometimes identified as Kurt Lewin. In a 1947 article, Lewin coined the term ‘group dynamics’. He described this notion as the way that groups and individuals act and react to changing circumstances. Lewin theorized that when a group is established it becomes a unified system with unique dynamics that cannot be understood by evaluating members individually. This idea quickly gained support from sociologists and psychologists who understood the significance of this emerging field.

Styles and Streams in Risk and Safety

A range of philosophical and anthropological perspectives have emerged in a number of ‘streams’ in the risk and safety industry. Each stream reveals different anthropological, sociological and psychological assumptions about humans, organisations and material. Each of these streams and styles is compared in Appendix 1, A Comparison of Risk and Safety Streams and Styles. The comparison serves to show what a social psychology of risk and safety considers in its response to human judgment and decision making about risk and safety.

When risk and safety people debate with each other about what to do about risk, they generally debate from a range of assumptions about what it is to be an educated and functioning human in an organisation/society.

The reality is, we are greatly affected by what happens around us when it comes to assessing and managing risk. The main finding that we learn from social psychology is that conformity, obedience and social perception are all tied to context and situation, much more powerfully than to character. When we attribute how people make sense of risk to personality, intelligence or ‘common sense’, social psychologists label this the ‘fundamental attribution error’; that is, humans tend to overestimate the importance and power of individual personality and underestimate the influence of social situations.

The following discussion helps explain some of the fundamental principles and issues that social psychology brings to the understanding, assessment and management of risk and safety.

Belief Congruence

Belief congruence is a foundational idea behind a number of explanations of influence, controlling and non-compliant behaviours. Belief systems are important anchoring points for individuals and identity with groups. Congruence is therefore rewarding and attractive, while negative congruence produces negative attitudes. Belief congruence is understood by social psychologists to explain the attraction of prejudice, discrimination and a range of means of differentiation in social identity. Crowd behaviour and dissent from crowd behaviour are explained by the attraction of group and in-group dynamics.

Bounded Rationality

First proposed by Herbert Simon (1978), bounded rationality is the idea that in decision-making, the rationality of individuals is limited by the information they have, the cognitive limitations of their minds, and the finite amount of time they have to make a decision. The truth is humans are limited by what our minds and social constructs can manage. Humans have to make decisions without all possible information available.

Bystander Effect

Recent studies of the Abu Ghraib incident in Iraq (American soldiers tortured prisoners) confirm many of the findings of social psychology regarding the way we tend to behave in groups. Most of us either conform or passively accept the status quo when under group pressure. In one experiment, Rosenhan (1973) had a group of mentally healthy researchers admitted (anonymously) to a psychiatric hospital, and none of them could convince authorities that they were not mental patients. One of the researchers was kept there for 7 weeks because hospital staff interpreted everything he did as confirmation of his mental illness.

Extensive research into what became known as Kitty Genovese Syndrome or the ‘Bystander Effect’ shows that people make sense of risk differently if they are on their own or in a group. This research followed the brutal murder of Kitty Genovese on March 13 1964. Kitty was stabbed to death 30 metres from her home in Kew Gardens, New York City. She cried for help, and the attacker drove away, then returned a second time and stabbed her again. There were dozens of witnesses who both heard and saw the event and yet none of them responded. Following the event there was public outrage at the ‘apathy’ of the 38 witnesses; the lack of response didn’t make sense. However, the work of social psychologists shows that we change our behaviour if we are in a large group, because it creates a diffusion of responsibility; that is, if others do nothing we identify with them, not the victim. We tend to look around and if others don’t assess the situation like us we tend to doubt our own perception.

If you want to assess risks at work, the most effective tool is a low level conversation with no more than 2 or 3 others. The Bystander Effect and Groupthink are so strong in large groups that they make any sense of having properly assessed risk, or any dependence on communication of risk, highly unreliable.

Cognitive Bias

A cognitive bias is a pattern of deviation in judgment. Individuals create their own ‘subjective social reality’ from their perception of their engagement with others in groups and organisations. There are more than 250 cognitive biases, effects and heuristics that affect the judgment and decision making of humans (http://en.wikipedia.org/wiki/List_of_biases_in_judgment_and_decision_making). Most biases and effects are socially conditioned.

Some of the most common cognitive biases are:

· Abilene Paradox: Organisations frequently take actions in contradiction to what they really want to do and therefore defeat the very purposes they are trying to achieve … the inability to manage agreement is a major source of organisation dysfunction.

· Anchoring or focalism – the tendency to rely too heavily, or ‘anchor’, on a past reference or on one trait or piece of information when making decisions.

· Availability heuristic – the tendency to overestimate the likelihood of events with greater ‘availability’ in memory, which can be influenced by how recent the memories are, or how unusual or emotionally charged they may be.

· Dunning–Kruger effect – an effect in which incompetent people fail to realise they are incompetent because they lack the skill to distinguish between competence and incompetence.

· Fundamental attribution error – the tendency for people to over-emphasize personality-based explanations for behaviors observed in others while under-emphasizing the role and power of situational influences on the same behavior (see also actor-observer bias, group attribution error, positivity effect, and negativity effect).

· Gambler’s fallacy – the tendency to think that future probabilities are altered by past events, when in reality they are unchanged. Results from an erroneous conceptualization of the law of large numbers. For example, ‘I’ve flipped heads with this coin five times consecutively, so the chance of tails coming out on the sixth flip is much greater than heads.’

· Hindsight bias – sometimes called the ‘I-knew-it-all-along’ effect, the tendency to see past events as being predictable at the time those events happened. Colloquially referred to as “Hindsight is 20/20”.

· Hot-hand fallacy – The “hot-hand fallacy” (also known as the “hot hand phenomenon” or “hot hand”) is the fallacious belief that a person who has experienced success has a greater chance of further success in additional attempts.

· Primacy effect, Recency effect & Serial position effect – items near the end of a list are the easiest to recall, followed by the items at the beginning of a list; items in the middle are the least likely to be remembered.

· Sunk Cost Effect: When we have put effort into something, we are often reluctant to pull out because of the loss that we will make, even if continued refusal to jump ship will lead to even more loss. The potential dissonance of accepting that we made a mistake acts to keep us in blind hope.
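
The coin example given under the gambler’s fallacy above can be checked with a few lines of arithmetic. The following is only a minimal sketch, assuming a fair coin and independent flips; the function name `chance_of_tails_after_heads` is hypothetical and chosen for illustration. It lists every equally likely sequence of flips, keeps those that start with the observed run of heads, and counts how many end in tails.

```python
from fractions import Fraction
from itertools import product


def chance_of_tails_after_heads(run_length):
    """Exact chance of tails on the next flip of a fair coin after a run of heads.

    Assumes a fair coin and independent flips (an illustrative assumption).
    """
    # Enumerate every equally likely sequence of run_length + 1 flips.
    sequences = list(product("HT", repeat=run_length + 1))
    # Keep only the sequences that begin with the observed run of heads.
    after_run = [s for s in sequences if all(f == "H" for f in s[:run_length])]
    # Of those, count the sequences whose final flip is tails.
    tails_next = [s for s in after_run if s[-1] == "T"]
    return Fraction(len(tails_next), len(after_run))


print(chance_of_tails_after_heads(5))  # 1/2
```

Under these assumptions the chance of tails on the sixth flip is still one half, however long the preceding run of heads, which is why the belief that tails is ‘due’ is a fallacy.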

Cognitive Dissonance

Developed by Leon Festinger (Festinger, L. (1957) A Theory of Cognitive Dissonance. Stanford University Press, Stanford, California), cognitive dissonance refers to the mental gymnastics required to maintain consistency in the light of contradicting evidence. An understanding of cognitive dissonance is essential if one wants to understand conversion. Cognitive dissonance explains the attempts made to alleviate the feeling of self-criticism and discomfort caused by the appearance of the conflicting beliefs. The idea that compliance forces, power, punishment, incentives and other behaviourist methods ‘convert’ people from ‘unsafety’ to safety is naïve. Such belief denies all that has been learned from the psychology of addictions, psychology of conversion, psychology of fundamentalisms, psychology of abuse, cults and religions, suicide ideation and psychology of goals (Moskowitz, G., and Grant, H., (eds.) (2009) The Psychology of Goals. The Guilford Press, New York).

In many ways televangelists and safety officers share something in common except televangelists are much better at it. They just have a different view of what it means to ‘save lives’. There is not space here to emphasise or map the dynamics of cognitive dissonance and its relevance to safety; I undertake a more detailed description of this in my book.

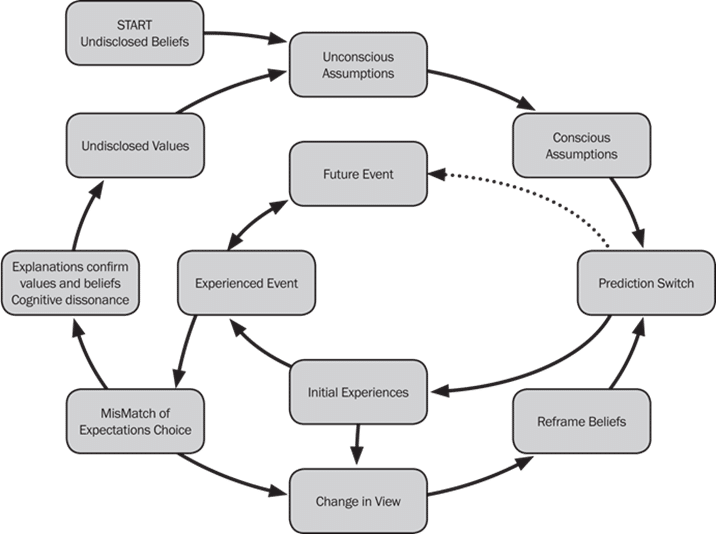

The cognitive dissonance cycle begins as individuals form unconscious and conscious anticipations and assumptions, which serve as predictions about future events. Subsequently, individuals experience events that may be discrepant from their prediction. Discrepant events, or surprises, trigger a need for explanation, or post-diction, and, correspondingly, for a process through which interpretations of discrepancies are developed. Interpretation, or meaning, is attributed to these surprises.

So it is that people construct frameworks in order to explain, understand and comprehend the stimuli which surround them. When they experience stimuli which do not fit into that framework or cognitive map they experience a sense of cognitive dissonance, which causes them to either reframe their thinking or make the stimuli fit their thinking. Sometimes people are able to think through the most amazing cognitive gymnastics to justify a strongly held belief. A study of cults or mass movements is a good place to start.

One of the driving interests in risk and safety is the demand for compliance. The study of cognitive dissonance provides an excellent framework for understanding why compliance is not always achieved in the risk and safety industry. The following diagram, Figure 1. The Cognitive Dissonance Cycle, helps explain how cognitive dissonance operates.

Figure 1. The Cognitive Dissonance Cycle

Discourse Analysis

Attributed to Leo Spitzer, Jürgen Habermas and Michel Foucault, discourse analysis is concerned with the transmission of power in systems of thought composed of ideas, symbols, artifacts, attitudes, courses of action, beliefs and practices that systematically construct the subjects and the worlds of which they speak. The language of safety is therefore important for the construction of meaning in organisations. For example, the language of ‘zero’ in safety constructs mindsets preoccupied with reductionism, minimalism and control, while the language of BBS constructs a focus on behaviour-only approaches to safety.

Dogmatism-Fundamentalism

Following the work of Adorno et al. on the authoritarian personality, Rokeach (1948, 1960) developed a theory regarding right-wing dogmatism and fundamentalism. Rokeach argued for a more generalised syndrome of intolerance based on closed-mindedness. It is characterised by isolation of contradictory belief systems, resistance to change in the light of evidence and appeals to authority to justify existing beliefs.

Framing, Pitching, Priming and Language

One of the foundations of social psychology is the idea of priming. Priming is anything that prepares and shapes decision making. The stimulus for priming can be anything from environment, tactile stimulation, text, language, semantics, space, place or group dynamics. For example: if you play the child’s game of making a person spell shop, hop, top, plop and flop, then ask them to answer quickly: what do you do when you see a green light? The person says ‘stop’. Many experiments have been undertaken to show how people can be primed with temperature, which is why climate even seems to make a difference in the homicide rate.

Professor John Bargh has been the pioneer in this field and has shown that negative and positive primes can influence decision making, especially in how one attends to risk. The work of Daniel Kahneman and Amos Tversky (1979) on Prospect Theory shows that negative primes tend to increase risk taking.

The use of language is important in the study of social psychology and of risk and safety. This is why the repetition of words and phrases that prime ‘dumb down’ thinking and poorly defined actions is important, e.g. the use of phrases such as ‘common sense’, ‘can do’, ‘get the job done’, ‘whatever it takes’ and so on.

Heuristics

Amos Tversky and Daniel Kahneman (1974) were the first to propose that decision makers use ‘heuristics’ or ‘rules of thumb’, to arrive at their judgments. The advantage of heuristics is that they reduce the time and effort required to make decisions and judgments. It is easier to estimate how likely an outcome will be than to engage in a long and tedious rational process. In most cases rough approximations are sufficient. The idea of heuristics is raised in Standards Australia Handbook 327: 2010 Communicating and Consulting about Risk. The handbook states (2010, p. 12):

Heuristics are judgmental rules or ‘rules of thumb’ shortcuts that people use to help gauge situations and help them to make decisions. Three of the most influential shortcuts used when people evaluate risk are ‘availability’, ‘representativeness’ and ‘anchoring and adjustment’.

The Handbook also states (2010, p. 13):

Heuristics are valid risk assessment tools in some circumstances and can lead to “good” estimates of statistical risk in situations where risks are well known. In other cases, where little is actually known about a risk, large and persistent biases may give rise to fears that have no provable foundation; conversely, such as for risk associated with foodborne diseases, inadequate attention may be given to issues that should be of genuine concern.

Although limitations and biases can be easily demonstrated, it is not valid to label heuristics as “irrational” since in most everyday situations, rule-of-thumb judgements provide an effective and efficient approach for estimating risk levels. It’s not unusual for specialists to also rely on heuristics when they have to apply judgment or rely on intuition.

But heuristics often leads to overconfidence. Both lay people and specialists place considerable (sometimes unjustified) faith in judgments reached by using heuristics. In particular, “awareness” of a hazard does not imply any other knowledge than that the hazard exists, but people may be tempted to pass judgment and make decisions based on this alone.

Understanding how heuristics affect decisions is critical in developing learning and response in the assessment and management of risk and safety.

Implicit (Tacit) Knowledge

Implicit (tacit) knowledge was first introduced by Michael Polanyi in 1958 (Polanyi, M., (1962) Personal Knowledge: Towards a Post-Critical Philosophy. University of Chicago Press, Chicago) and describes knowledge that is not explicit. Explicit knowledge can be written down, explained and shared, whereas implicit knowledge is sometimes not even known to the user until it is enacted. Implicit knowledge is sometimes known as ‘gut’ knowledge and explains the kind of knowledge that is developed in the unconscious by experience and intuition over time. Much of our decision making comes from our tacit knowledge. This was explained in Malcolm Gladwell’s book Blink as well as by others like Klein (Klein, G., (2003) The Power of Intuition. Doubleday, New York.; (1998) Sources of Power, How People Make Decisions, MIT, New York) and Plous (Plous, S., (1993) The Psychology of Judgment and Decision Making. McGraw Hill, New York).

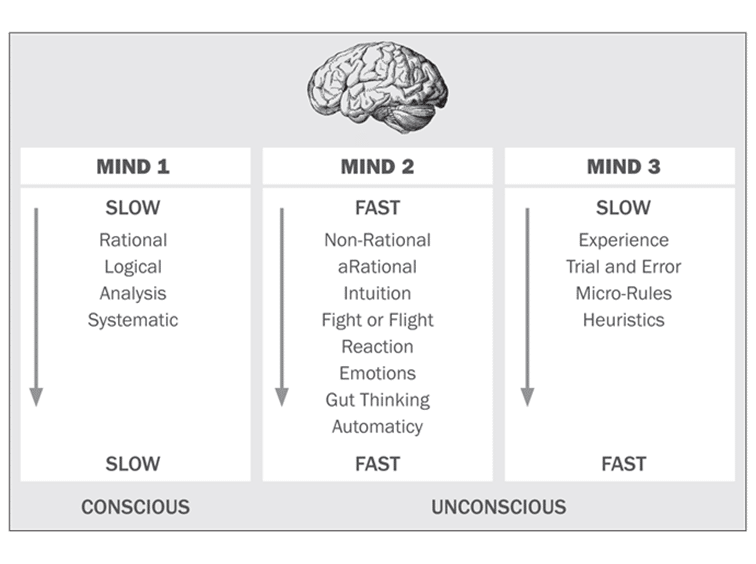

There are a number of important connections between the idea of implicit (tacit) knowledge and the enactment of the unconscious. The kind of decision making that uses intuition is said to be non-rational or arational. Non-rational decision making is not irrational but rather works in a whole new dimension of the mind that may not engage the rational (slow) mind. This has been explained by Kahneman (Kahneman, D., (2011) Thinking, Fast and Slow. Farrar, Straus and Giroux, New York). It is from the unconscious and intuition that a great deal of fast thinking and enactment comes. This is where heuristics (mental micro-rules and shortcuts) originate.

One of the best books to read on implicit decision making is by Klein (Klein, G., (2003) The Power of Intuition. Doubleday, New York). Intuition is the way we translate our experiences into action. It is why learning by experience is an important mode of learning. Intuition is not a bias that needs to be suppressed nor magic but rather, it is a non-rational mode of thinking that needs to be better understood.

Intrinsic Motivation

What are the key drivers of human behaviour, particularly considering groups and organisations? What are the motives which drive human action, thinking, judgment and decision making? A useful acronym to help remember the 6 major motives and drivers of human psychosocial action is BUCCET. BUCCET stands for:

· Belonging – people first and foremost need to belong; isolation and rejection are major turn-offs for humans. People need to be in relationship in order to survive and thrive. It is from belonging that we develop and establish identity.

· Understanding – people need “to know”, this helps them adapt and predict the fundamentals of living. When we know we can construct our reality, attraction and better establish our belonging.

· Control – when we belong and understand we then learn to control and manage ourselves, our environment and others in the world. This is how we make sense of self in position to others and our environment.

· Communication – the need to engage, interact, connect and attract and reject others is founded on the basics of communication, language and discourse.

· Enhancing Self – people need more than just to belong, they need to feel special, through self-esteem, self-improvement and self-sympathy. Self-enhancing also explains aspects of attraction, attribution, attitudes, helping, aggression and social influence.

· Trust – when we trust we can adapt better to the world and others, and with effective communication, cooperate and interact with others. This builds mutual altruism and group loyalty.

These are the fundamental motives and the key to grasping what motivates and de-motivates others. The social psychology of leadership suggests that getting the context right first is the key to motivation: create an environment where these fundamentals are fostered.

The study of intrinsic motivation was put on the map by Albert Bandura (Bandura, A. (1977). Social Learning Theory. Englewood Cliffs, NJ: Prentice Hall.) and his work on social learning theory. There are three core concepts at the heart of social learning theory. First is the idea that people can learn through observation. Next is the idea that internal mental states are an essential part of this process. Finally, this theory recognizes that just because something has been learned, it does not mean that it will result in a change in behavior. Bandura demonstrated the effectiveness of his theory through the ‘bobo doll experiment’ (http://www.youtube.com/watch?v=hHHdovKHDNU).

An excellent book on intrinsic motivation is Deci, E., (1995) Why We Do What We Do, Understanding Self-Motivation. Penguin, New York.

Learning and Styles of Learning

The role of learning in risk and safety is the fulcrum on which everything is balanced. Any theory of risk and safety that excludes knowledge or definition about learning is incomplete. One of the best ways to judge the effectiveness of an organisation’s focus on safety and risk is to see if the word ‘learning’ appears anywhere or prominently in their discourse. There are many organisations that talk about ‘zero’ but never use the word ‘learning’ when discussing risk and safety. Some companies have even substituted the word ‘zero’ for safety and so ‘prime’ their population by not even using the word ‘safety’ when talking about risk.

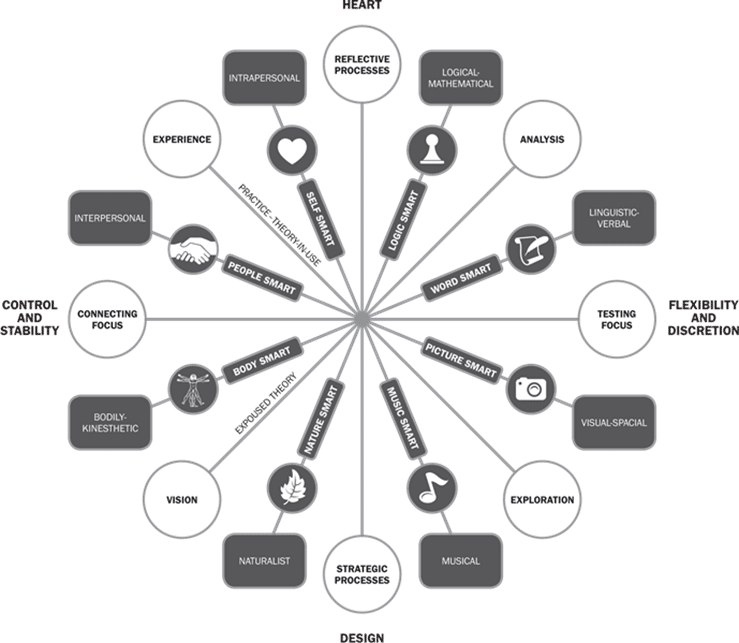

In 1983 Howard Gardner released Frames of Mind and shook the established world of schools, education and learning by proposing that humans have eight or more ‘learning intelligences’. Gardner’s work shows that even the way we conduct inductions and training in risk and safety doesn’t ensure learning. The eight learning intelligences are represented graphically in Figure 2. The Eight Learning Intelligences. The fact is, people learn differently and learning effectiveness varies according to learning intelligence. This is why some people learn much better by doing than by theorising. Unless the organisations embrace the concepts of learning, motivation and the perception of risk in their approach to safety, their focus will remain fixated on systems, regulation and the physicality of risk. The idea of safety ownership will remain foreign to their organisation.

Figure 2. The Eight Learning Intelligences.

Reciprocal Determinism

Postulated by social cognitive theorist Albert Bandura, reciprocal determinism states that the situation people find themselves in will influence both their behaviour and their attitudes, that people’s behaviour will influence both their attitudes and the situation, and that people’s attitudes will influence their perceptions of a situation and, in turn, influence their behaviour.

Risk Homeostasis

Developed by Gerald Wilde (Wilde, G., (2001) Target Risk 2. PDE Publications, 2001). Risk homeostasis holds that everyone has his or her own fixed level of acceptable risk. The famous Berlin Taxi Experiment first conducted by Wilde in 1981 demonstrates the idea of ‘risk compensation’. What this means is that people adjust their response to safety technologies. Safety technologies are not neutral but are interpreted. It is possible that some safety technologies increase rather than reduce risk. This is because humans tend to resist external controls and prefer to ‘own’ their decisions. The current thirst in society for ‘edgework’ exemplified in ‘X-games’ is evidence of risk homeostasis. See further: Zinn, J., (ed) (2008) Social Theories of Risk and Uncertainty, An Introduction. Blackwell, London.

The Authoritarian Personality (TAP)

The authoritarian personality (TAP) is a personality type of an individual who puts his or her value in strength and leadership, and believes that those who are not like-minded or in agreement are simply weak. An individual with this type of personality is often unwavering and critical, with a superstitious and unfailing belief that a power larger than him or herself is governing fate. During the mid-1940s, researchers first developed theories that racism is also an inherent part of an authoritarian personality.

The Authoritarian Personality was written by Theodor W. Adorno, Else Frenkel-Brunswik, Daniel Levinson, and Nevitt Sanford, researchers working at the University of California, Berkeley, during and shortly after World War II. Adorno et al. developed a set of criteria by which to define personality traits, and ranked these traits and their intensity in any given person on what they called the ‘F scale’ (F for fascist). The personality type Adorno et al. identified can be defined by nine traits that were believed to cluster together as the result of childhood experiences. These traits include conventionalism, authoritarian submission, authoritarian aggression, anti-intellectualism, anti-intraception, superstition and stereotypy, power and ‘toughness’, destructiveness and cynicism, projectivity, and exaggerated concerns over sex.

TAP (and the work of Milgram) helps explain why the Nazis in World War II were able to be so systematic, efficient and calculated in their extermination of the Jews. TAP also helps explain the dynamics of xenophobia and eugenics.

The Perception of Risk

All risk involves a degree of uncertainty and subjective attribution. Paul Slovic (Slovic, P., (2000) The Perception of Risk. Earthscan, London. Slovic, P., (2010) The Feeling of Risk: New Perspectives on Risk Perception. Earthscan, London) has shown that perception of risk varies according to life experience, cognitive bias, heuristics, memory, visual and spatial literacy, expertise, attribution and anchoring. Slovic uncovered three basic dimensions connected to public perceptions of risk. These are:

- Dread risk: a perceived lack of control, dread or catastrophic potential, fatal consequences and inequitable distribution of risks and benefits.

- Unknown risks: judged as unobservable, unknown and new and delayed in their manifestation of harm.

- Level of exposure: this refers to the number of people that can be harmed at one time.

Humans tend to attribute greater risk (aggravated risk) when a higher number of people can be harmed in a shorter period of time. People tend to mitigate risk when the risk is unknown or delayed over time with fewer people exposed to the risk.

The Unconscious and Enactment

Championed by John Bargh (Bargh, J. A., (ed.) (2007) Social Psychology and the Unconscious: The Automaticity of Higher Mental Processes. Psychology Press, New York; Hassin, R., Uleman, J., and Bargh, J., (2005) The New Unconscious. Oxford University Press, London). Bargh shows that many of our decisions and judgements are ‘primed’ by the anchoring of words or social context. This idea of automaticity (autopilot) is also supported by social psychologists of risk: Slovic, Plous, Sunstein and Gardner.

There are strong connections between what has been discovered by Bargh and discourse analysis. For this reason safety culture programs need to take much greater care with safety communications, language, words and symbols.

Prof. Karl E. Weick introduces the idea of enactment in his work (Weick, K., (1979) The Social Psychology of Organizing. McGraw Hill, New York. Weick, K., (1995) Sensemaking in Organisations. Sage Publications, London. Weick, K., and Sutcliffe, K., (2001a) Jossey-Bass, San Francisco. Weick, K., (2001) Making Sense of the Organisation Vol. 1. Blackwell, London. Weick, K., (2001b) Making Sense of the Organisation Vol. 2. Blackwell, Oxford. Wilde, G., (2001) Target Risk 2. PDE Publications, 2001). Weick’s work on ‘sensemaking’ and ‘collective mindfulness’ are important aspects of the social psychology of risk.

The Weick concept of Mindfulness should not be confused with the Buddhist concept of Mindfulness advocated by Kabat Zinn. Just as sensemaking is much more than just making sense of something, so too mindfulness is more than just being mindful.

The following qualities explain mindfulness and how people cope with the problems of external adaptation (integration with culture and environment) and internal integration (consistency with self and values). An examination of how these seven qualities develop debunks the notion that sensemaking is somehow shared or common.

For Weick, mindfulness is the key to making sense of risk in the workplace. Weick’s (2001) research into High Reliability Organisations (HRO) establishes five key qualities needed to manage risk mindfully, these are:

· Preoccupation with failure;

· Reluctance to simplify interpretations;

· Sensitivity to operations;

· Commitment to resilience; and

· Deference to expertise.

One is therefore mindful if these five qualities are activated. These qualities are based on Weick’s research into risk management in nuclear power plants and on aircraft carriers.

Karl E. Weick discusses the essential tools and filters we use to make sense of information. These are:

· Self Esteem: Your own confidence in yourself, personal identity and what you think of yourself in relation to others will affect the way you interpret information.

· History: Your past story, from where you were born and lived to what got you to where you are. All things in your personal history have some influence on what you know and how you interpret the present.

· Social Context: Where you are in relation to others, what is happening around you, the nature of those around you and the way they relate to the same information all influence the way you interpret information.

· Confirming Evidence: We act something into belief, even creating a bias in our minds so that when something happens it confirms the belief. For example, if we rev up our own car in response to the hot car full of young men mentioned earlier, we enact a new scenario which may confirm or disconfirm what we believe. If we hold our finger up or tactically ignore their behaviour, each act brings into being a new act. Something new changes the sense of what is happening.

· Cues and Indicators: What we see, hear and feel doesn’t necessarily carry information with it. We recognise indicators and cues which give us information similar to things we have experienced before. We recognise the importance of the revving motor and know it means power, provocation and aggression. All information is subjective and interpreted.

· Believability: Isn’t it peculiar that when something unexpected happens we express surprise, amazement and disbelief? Our capacity to imagine is directly linked to not only what we believe but also to what we are willing to believe. Our ability to imagine extends or limits our ability to make sense of things. Believability is an important part of prediction, and combines with past experience and cues to help us imagine what is possible. If we don’t think something is possible, we don’t plan for it and certainly can’t imagine the risks associated with it. We now know a tsunami can kill 250,000 people, we now know in Australia that a bushfire can kill 250 people and we now know that an earthquake and tsunami can put a country into nuclear crisis. Such evidence changes the way we interpret new information.

· Flow: The final tool we use to make sense of things is flow. The pace and speed of events affect the way we interpret them. Much of what we sense goes quickly to our subconscious and triggers a rapid intuitive response. Our intuition or gut feeling bypasses the need to process things step by step in a slow logical pattern. Our intuition gives us the ‘flight or fight’ response we need in a crisis.

So much of what we decide is ‘enacted’ by the unconscious. In other words, we do things without ‘thinking’. This doesn’t mean we do things that are ‘irrational’ but rather non-rational (aRational). The enactment of behavior from our unconscious or implicit knowledge enables us to manage the complexities of life without having to stop and analyse everything every moment. The use of intuition, autopilot and heuristics is critical in the shaping of behavior and decisions in social psychology. These come from minds two and three in the brain, as illustrated in Figure 3, One Brain Three Minds.

Figure 3. One Brain Three Minds

Conclusion

Much more could be discussed about these and other social psychological influences on human judgement and decision making. There is much more to learn about why some orthodox safety programs and initiatives don’t work. However, social psychology is no silver bullet; it helps explain why there are no silver bullets, and it extends the journey. Once we get our heads out of silver bullets and begin to be realistic about human judgement and decision making, we may be better able to make sense of risk and broaden our approaches to its understanding, analysis and management.

Appendix 1 – A Comparison of Risk and Safety Streams and Styles

Note: This comparison is not intended to limit each stream or style to itself. Some approaches to risk and safety build on other styles and combine aspects of more than one style.

| | Orthodox Legal | Safety Science | Behavioural-Based Safety | Zero Harm | Process-Based Safety | People-Based Safety | Psychosocial Safety | Social Psych Safety |
| --- | --- | --- | --- | --- | --- | --- | --- | --- |
| View of Humans | Human as servant | Human as object | Human as machine | Human as perfect | Human as part of system | Human as person | Well being drives decision making | Social relations drive decision making |
| Focus | Rules, regulations & standards | Method, order & supposed logic | Rewards, monitoring, policing | Counting, failure & compliance | Organisation, systems & glitches | Individuals, holistic safety | Well being, mental health & health | Social psychology, relationships & neuropsychology |
| Origins and Foundations | Robens, Brooks, Brundtland | Taylorism, Heinrich, Bird, Difford | Skinner, DuPont, McSween, López-Mena | Broken Window Theory (Wilson and Kelling), DuPont | Reason, Hopkins, Sunstein, Dekker, Petersen, Hollnagel | Geller, Reason, Thomas | Judith Erickson, Dollard, Newman, Cara & MacRae | Bandura, Weick, Plous, Slovic, Maslow, Long |
| Language | Compliance, rules, punishment, control, consequence, systems, checklist, ALARP, Reasonably Practicable | Hazards, barrier, prevention, controls, consequence | Behaviour, prevention, extrinsic, reward, punishment | ‘all accidents are preventable’, aspiration, target, failure | Systemic error-failure, precedence, incubation, systems, methods | Human error, due diligence | Health, workplace, relationships, mental health, well being, work life balance | Risks, intrinsic motivation, heuristics, learning, mind, conversation |
| View of Culture | Culture-as-systems | Culture-as-mechanics of systems | Culture-as-behaviour | Culture-as-perfection-controls | Culture-as-organisational-and leadership in systems | Culture-as-groups and leadership | Culture-as-holistic relationships | Culture as social construct |
| Strategy for Change | Increased policing and systems | Increased barriers and controls | Increased surveillance and policing behaviours | Increased punishment and promotion of failure | Increased organisational intelligence | Increased focus on values | Increased focus on holistic relationships | Increased focus on social constructs and autonomy |
| Essential Concepts | Hierarchy of control | Organisational systems | Observing and conditioning behaviours | Aspiration and target creates reality | Reforming organisations | Tuning into people factors | Improving well being and balance | Understanding and managing relationships and influences |
| Focus question | How can safety be organised? | What are the mechanics of safety? | How can people be controlled? | How many injuries would you like today? | How does the organisation affect safety? | How can people minimize human error? | How can we keep the whole person well? | How do social arrangements affect decision making? |
| Solutions | More engineering, technology, legislation and regulation | Deconstruct mechanics, bowtie and barriers | Surveillance, training, positive and negative reinforcement | Counting failure, publish failure, preach aspirations | Improve organisations and leadership | Prioritise human factors | Enhance well being and other aspects of safety will follow | Learning and engagement through social relationships and attending |

Bernard Corden says

A former Boeing Professor of Computer Science and Engineering at the University of Washington:

http://sunnyday.mit.edu/

https://www.barrons.com/articles/boeing-ceo-dennis-muilenburg-pay-51577136749

Rob Long says

Leveson is clearly focused on process safety and STEM approaches to risk.

Anja Vibe says

In your Comparison of Risk and Safety Streams and Styles, where do you see Prof. Nancy Leveson?