Originally posted on August 24, 2016 @ 5:05 PM

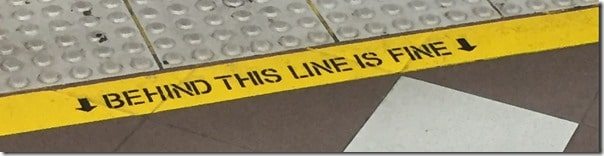

Standing on a train platform this morning, I noticed that the old authoritative “STAND BEHIND THE YELLOW LINE” had been replaced with something a little more personable (see below, as opposed to ‘in front of this you get a fine’). It reminded me of this article by Gab Carlton from a few years ago, which generated an incredible number of comments.

Stand Behind The Yellow Line – Do Engineering Controls Affect Risk?

Standing on a busy Sydney train platform the other day got me thinking. Why do most of us conform and stand behind the yellow line? There are no signs saying you must stand behind the yellow line for your safety, and there is no one policing whether you’re standing behind it or not. There are no rules or regulations on ‘the yellow line’ and, more importantly, there are no inductions into these high-risk areas. So why is it that most people conform to this relatively simple ‘safety control’?

This photo was taken mid-afternoon, so it is not a good depiction of what this underground platform can look like in peak hour: people jammed in along the whole platform, only a couple of feet away from potential death. Because let’s face it, if you ‘accidentally’ fell off the edge and met with an oncoming train, there is no turning back.

So as I stood there contemplating the yellow line, I thought: why do we not have more incidents in this seemingly non-regulated process? People are easily distracted by phones, conversations, children, marketing posters and, worse still, rushing for trains, and yet minimal incidents occur.

To answer this question, we can look at Risk Homeostasis, a theory first proposed by Gerald Wilde in 1982. He argued that humans change their behaviour according to the risk they perceive. The controversial Munich taxi experiment, often cited in support of his theory, showed that when anti-lock brakes (ABS) were installed on the taxis, the drivers tended to drive harder and brake later, so there was no real improvement in accident rates. This implies that when engineering controls are used to minimise the risk, humans become complacent about it. Yet when we take away those controls, people are more alert and act accordingly.

Can we assume, then, that when we perceive a high risk, in this case a train platform and potential death, we become more cautious and are in fact better able to manage the risk without being ‘told’ how to act? In other words, if we are ‘allowed’ to think for ourselves, we can determine how dangerous a situation is and ‘manage’ our own behaviour to minimise the chance of a serious incident or injury.

This reminds me of a time in a previous role when we were having problems at a site that had two warehouses on opposite sides of a busy public road. Many of the workers were ‘jay walking’ between the buildings. Because we deemed it to be high risk, we had to ‘fix’ it. With what we knew best at the time, we used the ‘hierarchy of controls’ and settled on an engineering control. We put a barricade (railing) in the middle of the road to direct people to cross at the lights only. This in fact created more problems, because it didn’t change the behaviour as we had hoped. People still ‘jay walked’, and now at higher risk, because they were either climbing over the barricade or being forced to walk precariously along it to reach the lights and then cross. We thought we were doing the right thing, but had we known how people perceive risk and make decisions, we might not have implemented such an expensive control measure that introduced a greater risk. Not a fail, but a learning opportunity: not all fixes can be engineered!

So the next time you are thinking of engineering controls to ‘better manage’ risk, think of the ‘yellow line’ and risk homeostasis. Understand how this can influence the outcome, and take it into account when implementing controls. Ask the question: will the proposed engineering controls actually decrease the danger, or could they increase it?

Do you have any thoughts? Please share them below.