My favourite quote is in relation to racing drivers on normal roads and why they have more crashes and get more fines: “At their level of skill, driving like an average driver may be intolerably boring. Imagine being a master of Beethoven and all you are allowed to play is ‘Twinkle, twinkle, little star’!” (Wilde, 2014, p79)

Anyone interested in learning more about RHT can download a free copy of Wilde’s book “Target Risk 3” here: Target Risk 3 Free Download

Q: “How does the theory of risk homeostasis explain why some safety and risk initiatives go wrong?”

In the risk and safety industry, it is common to try to isolate, focus on and solve a particular problem or risk with a specific program or initiative.[1] [2] When the concept of Risk Homeostasis Theory is not considered in the development and implementation of such initiatives, then they may not always work out as planned or expected due to the subjective risk perceptions, unconscious decisions, biases and by-products associated with Risk Homeostasis Theory (Wilde, 1982).

Risk Homeostasis Theory (RHT) was initially proposed by Wilde in 1982[3]. Risk Homeostasis Theory proposes that, for any activity, people accept a particular level of subjectively evaluated risk to their health and safety in order to gain from a range of benefits associated with that activity (Wilde, 2014, p11). Wilde refers to this level of accepted risk as “target level of risk” (Wilde, 2014, p31). If people subjectively perceive that the level of risk is less than acceptable then they modify their behaviour to increase their exposure to risk. Conversely, if they perceive a higher than acceptable risk they will compensate by exercising greater caution. Therefore people don’t always respond as expected to traditional safety initiatives but rather adjust their response to more rules, administrative controls, new procedures and engineering technologies according to their own target level of risk.

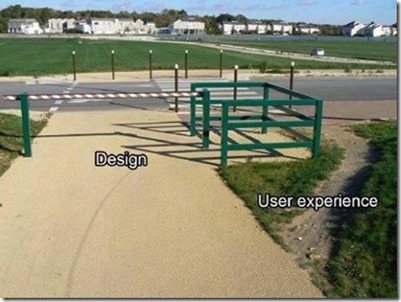
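The feedback loop described above can be illustrated with a small toy simulation. This is only a sketch under stated assumptions, not Wilde's own formulation: it assumes perceived risk is simply a hazard level multiplied by a behaviour intensity (for example, speed), and that people adjust behaviour in proportion to the gap between their target risk and their perceived risk. The function name, parameters and numbers are all hypothetical.

```python
def simulate(target_risk=1.0, hazard=1.0, control_at=20, control_factor=0.5,
             adjustment_rate=0.5, steps=60):
    """Toy model of a risk-compensation feedback loop (illustrative only).

    Assumptions, none of which come from Wilde's book:
      - perceived_risk = hazard * behaviour
      - a safety control introduced at step `control_at` multiplies the
        hazard by `control_factor` (0.5 means the hazard is halved)
      - behaviour is nudged each step towards closing the gap between
        target_risk and perceived_risk

    Returns a list of (step, behaviour, perceived_risk) tuples.
    """
    behaviour = target_risk / hazard  # start at equilibrium
    history = []
    for step in range(steps):
        if step == control_at:
            hazard *= control_factor  # the safety initiative takes effect
        perceived_risk = hazard * behaviour
        history.append((step, behaviour, perceived_risk))
        # Below target: take more risk. Above target: exercise more caution.
        gap = target_risk - perceived_risk
        behaviour += adjustment_rate * gap / hazard
    return history


if __name__ == "__main__":
    for step, behaviour, perceived_risk in simulate():
        if step in (19, 20, 25, 59):
            print(f"step {step:2d}: behaviour={behaviour:.3f} "
                  f"perceived risk={perceived_risk:.3f}")
```

In this sketch the control halves the perceived risk at first, but the behaviour intensity then climbs until perceived risk is back near the target. That is the pattern the theory predicts; the model itself proves nothing about real behaviour.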

The Oxford Dictionary defines “initiative” as: “An act or strategy intended to resolve a difficulty or improve a situation; a fresh approach to something”[4]. This definition of initiative may, at first, seem to fit well in the context of orthodox safety and risk. Safety and risk problems may be identified as a result of a risk assessment or root cause analysis. Initiatives are then implemented to address the specific identified issue. What is not always appreciated is that, due to the interdependency of various systems and their components upon each other, initiatives may correct one problem but displace the problem elsewhere in another unanticipated form (Hancock and Holt, 2003). “These problems of interdependency can be referred to as messes” (Hancock and Holt, 2003). Risk Homeostasis Theory recognises the by-products and trade offs associated with implementing safety and risk initiatives in this messy environment:

Reduction in one particular immediate accident cause may make room for another to become more prominent! (Wilde, 2014, p93).

The same concept is recognised by Long:

It’s difficult for some to conceive that a desired procedure, policy or behaviour can actually generate the very opposite of what is desired (Long and Long, 2012, p. 33)

In defining “go wrong” the Oxford Dictionary is not so concise. Its definition includes synonyms such as “mistake”, “failure”, “error” and “incorrect”[5]. These words are also commonly used in orthodox risk and safety. But binary oppositional thinking,[6] for example ‘right or wrong’ or ‘black or white’, is not useful in this context. Risk Homeostasis Theory considers the uncertainties arising from human behaviour and how people may react to safety and risk initiatives. These uncertainties in perception and judgement create a “wicked problem” (Hancock and Holt, 2003). When messes and wicked problems are combined (a wicked mess) there is no correct answer or perfect solution, so safety and risk initiatives must “look less to solve problems than to resolve tensions and realise satisfactory outcomes” (Hancock and Holt, 2003). There will always be limited time, money, resources and energy that will end the problem solving process before a perfect solution can be found. Accepting this is understood as “satisficing” (Hancock and Holt, 2003). Therefore safety and risk initiatives cannot simply go right or wrong; there will always be trade-offs and by-products. Risk Homeostasis Theory explains not why initiatives ‘go wrong’ but why these initiatives don’t always ‘go to plan’ or achieve the expected results.

How does the safety and risk industry currently explain why initiatives do not go to plan? Whilst this inevitability is generally acknowledged in current legislation as the requirement for the residual risk to be “as low as reasonably practicable” (ALARP)[7], this does not effectively explain how or why these initiatives do not go to plan. For many, the ineffectiveness of improvement initiatives is certainly counter-intuitive (Long and Long, 2012, p. 33), but can still be explained logically, mechanistically and simplistically. Proponents of the unsafe acts or conditions premise may surmise that more or better engineering controls should have been implemented, that more resources are required, or that it is due to human error and more training is needed. According to the OHS Body of Knowledge (BoK):

Contemporary theory and research suggests that the failures that lead to incidents can be attributed to a combination of factors such as human error, inadequate design, poor maintenance, degradation of working practices, inadequate training, poor supervision and excessive working hours, which in turn are influenced by organizational and management culture. (Ruschena 2012)

Current safety legislation[8], traditional textbooks[9], training courses[10] and government authorities[11] espouse that the accepted method of eliminating or minimising hazards and risk is to consult the “hierarchy of hazard control”, where controls are simplistically ranked from most effective and reliable to the least effective and reliable. When initiatives go wrong then the premise is that one must simply return to the list and choose a more effective hazard control or perhaps a combination of controls. Ruschena (Ruschena, 2012) provides some insight into the complexities of hazard control and states that:

Approaches to control need to move beyond a simplistic application of the hierarchy of control to consider strategies required in the pre-conditions, occurrence, and consequence phases. (Ruschena, 2012)

Ruschena also suggests that failure causation is complex and better strategies should be formulated from a knowledge of barriers and defences or sociotechnical systems such as the “Swiss Cheese” model. Whilst Ruschena briefly acknowledges the need to have: “an understanding of the psychological principles that explain behaviour of workers and individuals and in groups” (Ruschena, 2012), this is still not a useful explanation of why safety and risk initiatives may not go to plan. According to Long:

If we want to make sense of risk, then we have to understand the psychological drivers which attract people to it. If we want to make sense of risk we have to understand human decision making beyond simplistically blaming stupidity, human factors or human error (Long and Long, 2012)

The ineffectiveness of safety and risk controls may extend beyond specific hazards or individual issues and is not always immediately obvious. With the traditional narrow focus of many safety initiatives resulting from say controlling specific hazards and risks or root cause analysis of incidents, there are trade-offs and by-products for what may seem like short term success of any simplistic initiatives implemented. Long refers to these as “Band-aid Solutions” (Long, 2014, p. 103).

It is interesting that when we try to solve problems simplistically and in isolation, we create by-products that are either shifted or hidden till later … On the surface it appears that the problem is solved yet, under the surface, a whole new problem has emerged (Long, 2014, p. 103).

This transfer of risk and by-product effect was clearly demonstrated following the ‘September 11’[12] incident when, due to the new subjective perception of flying being an unacceptable risk, many decided to drive between cities. It was estimated that an additional 1,595 Americans died in car accidents in the year after the attacks.[13] Safety initiatives can also have a longer term or latent effect. In the recent crash of Germanwings flight 9525, the cockpit security initiatives introduced after September 11 appear to have stopped the senior pilot on the plane from regaining control of the Airbus A320.[14] In explaining this concept further, Wilde refers to “the delta illusion” (Wilde, 2014, p12), drawing the analogy of a river delta with three channels. If two channels are dammed (i.e. prohibition and risk aversion) then flow is not reduced by two thirds; rather, the third channel widens or another opens up. The premise is that we cannot stem the flow of water (or reduce the amount of risk) by “front end” and traditional safety controls, but only by stemming the flow further upstream. In the case of safety, interventions should be aimed at increasing the desire to be safe as opposed to erecting barriers and forcing behaviour modifications, as is the traditional approach (Wilde, 2014, p12). An example of this is closing a road to traffic in order to reduce its accident rate to zero. This simply transfers cars and accidents to other roads (Wilde, 2014, p13).

Many who espouse or are involved in the design and implementation of engineering controls often experience this “delta illusion”. Engineering controls are considered, besides elimination, to be the most effective forms of hazard control[15]. However, although they are intended to reduce the opportunity for any human error or intervention, these safety and risk initiatives often do not work as expected or planned, the reasons being clearly explained by Risk Homeostasis Theory. A major component of Wilde’s studies, completed in the development of the Risk Homeostasis Theory, involved a study of the effectiveness of engineering controls. Risk Homeostasis Theory essentially evolved out of a 4 year study involving a taxi cab fleet in Munich (Wilde, 2014, p93). Half of the cabs in the fleet were fitted with anti-lock brake systems (ABS), which prevent wheels from locking up and improve steering under hard braking and deceleration conditions. Rather than having a positive effect on accident rates, the initiative resulted in the cabs fitted with ABS experiencing a slightly higher accident rate. It was found that, in response to the installation of ABS, drivers perceived less of an accident risk due to having more vehicle control and mechanical devices that would protect them. They reacted by accelerating faster, braking later and driving faster around corners. These findings were supported in other trials held in Canada and Norway (Wilde, 2014, p95).

Malnaca (Malnaca, 1990), in relation to traffic safety, explains that although the fundamental government premise is that crash incidence and severity can be reduced by better road design, more safety devices, education and enforcement, Risk Homeostasis Theory proves that this premise is not entirely correct and that these initiatives lead to greater risk taking. Malnaca, in summarising the findings of the OECD in 1990, states that:

Engineering can provide an improved opportunity to be safe, education can enhance the performance skills, and enforcement of rules against specific unsafe acts may be able to discourage people from engaging in these particular acts, but none of these interventions actually increase the desire to be safe … The greater opportunity for safety and the increased level of skill may not be utilized for greater safety, but for more advanced performance. (Malnaca, 1990)

Another popular, yet not always effective, safety and risk initiative is training. Wilde refers to training as “intervention by education” (Wilde, 2014, p73).

It is not incompatible with RHT to propose that training could be used as a means for safety promotion, although its effects will necessarily be limited and the past record is not encouraging. (Wilde, 2014, p73)

According to Wilde, if a person undertakes training in a new skill then initially they may exhibit an over-confidence, or ‘Hubris’,[16] in their ability, or an underestimation of the inherent risks due to overestimating their own ability to identify and manage those risks (Wilde, 2014, p78). Wilde quotes a 1993 study of truck drivers in Norway who attended mandatory slippery road training courses (Wilde, 2014, p. 78). Investigators found that the accident rate unexpectedly increased as a consequence and attributed this to the course not only contributing to driver ability but also to increased driver confidence, with the net effect being more accidents.

Risk Homeostasis Theory also explains why risk and safety initiatives do not always turn out as expected when they involve already skilled and experienced people, particularly when those people find themselves in an over-controlled workplace. Wilde (Wilde, 2014, p79) presents the results of a US study, undertaken by Williams and O’Neill, into the on-road driving records of licensed race car drivers. They were found to be less safe than average drivers both per km driven and per person. They had more accidents and more speeding fines. Given that they took up this occupation in the first place, their target risk is likely to be greater than average but also,

At their level of skill, driving like an average driver may be intolerably boring. Imagine being a master of Beethoven and all you are allowed to play is “Twinkle, twinkle, little star”! (Wilde, 2014, p79)

A workplace example of this would be an experienced tradesman being required to sit through a typical “sleeping bag” (Long and Long, 2012, p. 70) safety induction, then being adorned in excessive PPE and constricted as to how he must perform the task and apply his skills. This constriction may come from safety initiatives such as 20 page generic safe work method statements (Long, 2014, p31) and subsequent safety observations and audits by those not at all familiar with his trade. The tradesman would find this situation “intolerably boring” (Wilde, 2014, p. 79) and thus automatically fall back on “tick and flick” (Long, 2014, p31), his own heuristics and biases developed over many years ‘on the job’ and his own subjective perception of the risks. According to Risk Homeostasis Theory, these safety initiatives do not work as planned because they do not alter the subjective perception of risk. They do not allow the tradesman to properly discern the actual risks, alter his perceptions or address his overconfidence in his existing skill set, nor do they provide any incentive to behave in a safer way. The tradesman may be “lulled into an illusion of safety” (Wilde, 2014, p. 79).

Risk Homeostasis Theory clearly explains why safety and risk initiatives do not work as planned. These initiatives traditionally focus on rational thinking and mechanistic or administrative hazard and behavioral controls (or unsafe acts and conditions). Some safety and risk initiatives fail to account for the, mostly, unconscious way that humans make judgements and decisions or the way they formulate their individual subjective perceptions of risk and subsequent behaviour. Safety and risk initiatives can be counter-productive because:

People will alter their behaviour in response to the implementation of health and safety measures, but the riskiness of the way they behave will not change, unless those measures are capable of motivating people to alter the amount of risk they are willing to incur. (Wilde, 2014, p12)

Risk Homeostasis Theory is counter-intuitive to the traditional approach to health and safety (Long and Long, 2012, p. 33), which holds that when initiatives don’t work as planned we just need more or better controls or greater vigilance. Risk Homeostasis Theory proposes that, rather than more controls, sometimes less control and more motivation may be more effective. When our subjective perception of risk is greater and we are able to make our own decisions on reducing it to an acceptable level (target risk), we will behave and adapt accordingly. This explains the success of initiatives such as shared pedestrian/vehicle zones.[17] Similarly, “Fun Theory” also shows that people can be more motivated to be safe through positive gains and fun[18] rather than by being “flooded with so much bureaucracy and administration” (Long, 2014, p31).

Bibliography

Hancock, D. & Holt, R. (2003). Tame, Messy and Wicked Problems in Risk Management. Manchester: Manchester Metropolitan University Business School Working Paper Series (Online).

Long, R. & Long, J. (2012). Risk Makes Sense: Human Judgement and Risk. Kambah ACT: Scotoma Press.

Long, R. (2014). Real Risk – Human Discerning and Risk. Kambah ACT: Scotoma Press.

Malnaca, K. (1990). Risk Homeostasis Theory in Traffic Safety. 21st ICTCT Workshop Proceedings, Session IV 1 – 6.

Ruschena, L. (2012). Control: Prevention and Intervention. In HaSPA (Health and Safety Professionals Alliance), The Core Body of Knowledge for Generalist OHS Professionals. Tullamarine, VIC: Safety Institute of Australia.

Wilde, G.J.S. (2014). Target Risk 3 – Risk Homeostasis in Everyday Life. Toronto: PDE Publications – Digital Edition.

[1] Hazardman – http://hazardman.act.gov.au/

[2] Dumb Ways to Die – http://dumbwaystodie.com/

[3] http://en.wikipedia.org/wiki/Risk_compensation

[4] http://www.oxforddictionaries.com/definition/english/initiative

[5] http://www.oxforddictionaries.com/definition/english/wrong

[6] https://safetyrisk.net/binary-opposites-and-safety-goal-strategy/

[7] WHS Act and Regulations – Section 18

[8] WHS Act and Regulations – Section 18

[9] http://www.healthandsafetyhandbook.com.au/how-the-hierarchy-of-control-can-help-you-fulfil-your-health-and-safety-duties/

[10] https://training.gov.au/Training/Details/DEF42312

[11] https://www.osha.gov/SLTC/etools/safetyhealth/comp3.html

[12] http://en.wikipedia.org/wiki/September_11_attacks

[13] http://www.theguardian.com/world/2011/sep/05/september-11-road-deaths

[14] http://www.theguardian.com/world/2015/mar/26/germanwings-crash-raises-questions-about-cockpit-security

[15] https://www.osha.gov/SLTC/etools/safetyhealth/comp3.html

[16] http://en.wikipedia.org/wiki/Hubris

[17] http://www.rms.nsw.gov.au/roadsafety/downloads/shared_zone_fact_sheet.pdf

ALEXANDRE ROGERIO ROQUE says

Analyzing the cognitive process makes sense, the brain does not like uncertainty, (This generates Cortisol and bad stress in the long term) it will seek to balance itself (Homeostasis). the problem is where that balance point will be, since this decision is usually unconscious. If it is a routine activity with high exposure to risk, over time he will seek this balance to eliminate Cortisol from the system and can do without evaluating the risk!

rob long says

I find it interesting how many safety theories are made ‘fact’ too. How many safety icons, myths, symbols and theories are made fact when they too are mostly just theory at best?

How funny that root cause, coloured matrices, hierarchies of control are made fact when they are not. … and here we are getting cautious about risk homeostasis when safety peddles more mumbo jumbo as fact than a witch doctor convention.

Riskcurious says

I think all theories and models should be understood in the context of both what they can offer and their limitations. I’m not sure that a “lesser of two evils” argument is particularly useful – if we don’t critically evaluate the theories, how are we even supposed to judge which are better? That seems to just open us up to judgements based purely on face validity, which is likely to result in adopting as fact those exact models that have been used as examples of being worse than RHT.

Riskcurious says

I have to say, the analogy of “Twinkle Twinkle” made me laugh a little as I immediately thought of the outcome being Mozart’s variations on “Ah, vous dirai-je, Maman”… which is probably not the negative outcome I was supposed to conjure up (of course I am fully aware that Mozart was not forced to only play Twinkle Twinkle but I can only imagine the variations that might have resulted if he was. I am also not suggesting that we should place such restrictions on people to see what their creativity can achieve). It just made me laugh.

On a more serious note though, whilst I agree that compensatory behaviours need to be considered when dealing with problems of risk control, I think we need to be a bit cautious of risk homeostasis theory for a few reasons. Firstly, the theory itself was just that; it was not claimed to be supported by sufficient empirical evidence (and since then, those arguing for the theory tend to cherry pick the evidence to support their views). Secondly, the theory would have to accommodate the context- and content-dependent nature of risk decisions, which, even if it were technically correct, would pretty much render it unusable from a practical perspective. It also makes it near impossible to properly validate through research. Just to be clear, this isn’t saying that people don’t compensate for risk controls, just that the nature of the equation may not be quite so clear cut as risk homeostasis theory tends to suggest.

Admin says

Of course it is just a theory and not a panacea – I would be happy if people just used the premise to initiate some critical thought and discussion around the potential byproducts/risk shifts of their controls rather than blind obedience to the hierarchy and the warm, fuzzy feeling of ticking off another hazard

Riskcurious says

Ah yes, that is pretty much all I was saying. Most definitely think about potential for compensatory behaviours and other by-products, but don’t get too caught up in risk homeostasis as a literal thing and start trying to find “target risk” levels etc, as it probably won’t provide much additional benefit.

Admin says

Yeah agreed, using a term like “target risk” is certainly going to send crusaders and engineers off on a wacky trajectory searching for and measuring a magic number ……. “target risk zero”

Jaime Lopez says

Awesome reading. I thought I was the only one who thought this way. As an Environmental Scientist who also manages safety and health it seemed to me that the way the Theory of Chaos was explained in Jurassic Park (movie one) was what a safety program has to deal with. Now I know this is actually called Risk Homeostasis. Now I have a name for it, and I know I’m not crazy. Thank You very much.

Admin says

Thanks Jaime – for many years I couldn’t figure out why the harder we tried to control and improve safety the worse it became – I was likewise relieved and enlightened to discover the theory of Risk Homeostasis – double edged sword though: on one hand it gave it a name, but it also made me realise what a wicked problem it really is